Automatically generating code from simulation models is a key development activity in Model-Based Design that inherently reduces the time and effort teams spend on hand coding. Successful deployment to a high-performance embedded system requires extremely efficient code. Code efficiency objectives include minimizing memory usage and maximizing execution speed. Successful deployment for military and defense systems also requires the ability to rigorously verify the code. Code verification objectives include compliance with requirements and conformance to standards.

This article describes how to measure code efficiency and perform code verification activities using Release 2011b of the MATLAB and Simulink product families, featuring Embedded Coder for flight code generation. The development and verification activities discussed are intended to satisfy DO-178B and upcoming DO-178C objectives, including those of the Model-Based Development and Verification supplement planned for release with the DO-178C update. The article does not examine every tool or DO-178 objective; instead, it focuses on new technologies. Qualification kits are available for the verification tools described.

Source Code Assessment

Code Efficiency

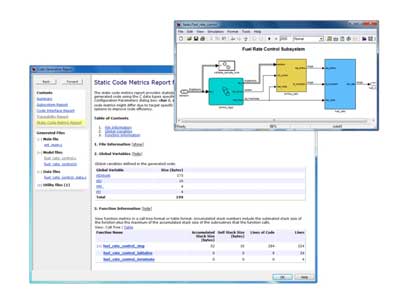

Code efficiency metrics fall into two broad categories: the first measures memory usage in terms of RAM, ROM, and stack size; the second measures execution speed, typically as cycle counts. Embedded Coder helps software engineers analyze and optimize the memory footprint of generated code by producing a code metrics report after code generation. The report shows lines of code, global RAM, and stack size, based on a static analysis of the source code and on knowledge of target hardware characteristics such as integer word sizes. The analysis is static because it does not involve cross-compiling or executing the code. This lets engineers make a quick first pass at optimizing memory usage from the source code alone, for example by trying different data types or modifying logic in the model. The next analysis and optimization phase, which assesses on-board memory utilization and execution time, requires the full embedded tool chain, as described in Executable Object Code Assessment below.

Figure 1: Static Code Metrics Report
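To make the idea of a static global RAM estimate concrete, the following Python sketch sums the storage of global variables using assumed target type sizes, without compiling anything. The typedef names mimic the rtwtypes.h style, but the byte sizes, the variable names, and the function estimate_global_ram are hypothetical, and padding and alignment are ignored; this is not how Embedded Coder computes its report. Comparing two data type choices shows the kind of quick pass such a report enables.

```python
# Assumed type sizes in bytes for a hypothetical 32-bit target. The typedef
# names follow the rtwtypes.h naming style; the sizes are assumptions.
TARGET_TYPE_SIZES = {
    "boolean_T": 1,
    "int8_T": 1, "uint8_T": 1,
    "int16_T": 2, "uint16_T": 2,
    "int32_T": 4, "uint32_T": 4,
    "real32_T": 4,
    "real_T": 8,
}


def estimate_global_ram(global_vars, type_sizes=TARGET_TYPE_SIZES):
    """Estimate global RAM in bytes from (name, c_type, element_count) tuples.

    Padding and alignment are ignored, so the linker may allocate more
    than this estimate.
    """
    per_var = {}
    for name, c_type, count in global_vars:
        per_var[name] = type_sizes[c_type] * count
    return sum(per_var.values()), per_var


# Hypothetical globals: a 4-element state vector, 3 inputs, and 1 flag.
design_a = [("ctrl_state", "real_T", 4), ("ctrl_in", "real32_T", 3), ("ctrl_ok", "boolean_T", 1)]
# Same design with the state vector switched to single precision.
design_b = [("ctrl_state", "real32_T", 4), ("ctrl_in", "real32_T", 3), ("ctrl_ok", "boolean_T", 1)]

total_a, _ = estimate_global_ram(design_a)
total_b, _ = estimate_global_ram(design_b)
print(total_a, total_b)  # 45 29
```

Switching only the state vector from double to single precision cuts the estimate from 45 to 29 bytes, the sort of trade-off an engineer might then confirm on the target.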

Code Verification

Source code verification relies heavily on code reviews and requirements traceability analysis. A new product from MathWorks, Simulink Code Inspector, automatically performs a structural analysis of the generated source code and assesses its compliance with the low-level requirements model. The inspection checks whether every line of code has a corresponding element or block in the model. Likewise, it checks elements in the model to determine whether they are structurally equivalent to operations, operators, and data in the generated code. It then produces a detailed model-to-code and code-to-model traceability analysis report.

Figure 2: Simulink Code Inspector Report
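The bookkeeping behind bidirectional traceability can be sketched in a few lines: every code location should trace to a model element, and every model element should be implemented somewhere in the code. The Python sketch below assumes a precomputed trace table; the function trace_gaps, the block names, and the file locations are hypothetical. It does not attempt the structural-equivalence checks that Simulink Code Inspector performs.

```python
def trace_gaps(model_blocks, code_to_block):
    """Report gaps in a bidirectional model/code trace.

    model_blocks: set of model element names.
    code_to_block: maps a code location (e.g. "file.c:line") to the model
    element it traces to, or None if no trace was found.
    Returns (untraced_code, unimplemented_blocks), both sorted.
    """
    # Code-to-model direction: code with no valid model element behind it.
    untraced_code = sorted(
        loc for loc, blk in code_to_block.items()
        if blk is None or blk not in model_blocks
    )
    # Model-to-code direction: model elements no code location implements.
    implemented = {blk for blk in code_to_block.values() if blk in model_blocks}
    unimplemented_blocks = sorted(set(model_blocks) - implemented)
    return untraced_code, unimplemented_blocks


# Hypothetical model elements and trace data.
blocks = {"Gain1", "Sum1", "Saturation1"}
trace = {"ctrl.c:41": "Gain1", "ctrl.c:42": "Sum1", "ctrl.c:43": None}
print(trace_gaps(blocks, trace))  # (['ctrl.c:43'], ['Saturation1'])
```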

Additional source code verification activities include ensuring compliance with industry coding standards such as MISRA AC AGC: Guidelines for the application of MISRA-C:2004 in the context of automatic code generation. With the R2011a release, Embedded Coder introduced a code generation objective that lets developers influence the code generator output in favor of the MISRA-C standard. MISRA-C analysis tools can then be applied to check the code. For example, Polyspace code verifier products analyze code for compliance with MISRA AC AGC and MISRA-C:2004. Polyspace can also determine whether the code contains run-time errors such as divide-by-zero and out-of-bounds array access. Used together, Simulink Code Inspector and Polyspace can address all code verification objectives in DO-178 Table A-5 that involve source code analysis. Objectives such as worst-case execution time analysis require the executable object code, along with additional techniques and tools such as those described below.

Figure 3: MISRA-C:2004 Code Generation Objective Specification
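One way a static analyzer can establish that a division never divides by zero, or that an index never leaves its array, is to reason about the range of values each variable can take. The toy Python sketch below illustrates that idea with simple interval arithmetic, classifying each operation as proven safe, a possible error, or a definite error. It is a deliberately simplified illustration: Interval, check_division, and check_index are hypothetical names, and real analyzers such as Polyspace track far richer information.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Interval:
    """Closed range [lo, hi] of values a variable may take at a program point."""
    lo: float
    hi: float

    def __sub__(self, other):
        # Interval subtraction: [a, b] - [c, d] = [a - d, b - c]
        return Interval(self.lo - other.hi, self.hi - other.lo)

    def contains(self, value):
        return self.lo <= value <= self.hi


def check_division(divisor):
    """Classify a division given the range of its divisor."""
    if divisor.lo == 0 and divisor.hi == 0:
        return "definite error"
    if divisor.contains(0):
        return "possible error"
    return "proven safe"


def check_index(index, length):
    """Classify an access a[index] for an array with the given length."""
    if index.lo >= 0 and index.hi <= length - 1:
        return "proven safe"
    if index.hi < 0 or index.lo > length - 1:
        return "definite error"
    return "possible error"


# Hypothetical input known to lie in [0, 10]; the code computes y / (x - 2).
x = Interval(0, 10)
print(check_division(x - Interval(2, 2)))                # possible error: [-2, 8]
print(check_division(Interval(5, 10) - Interval(2, 2)))  # proven safe: [3, 8]
print(check_index(Interval(0, 8), 8))                    # possible error: 8 is out of bounds
```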

Executable Object Code Assessment

Code Efficiency

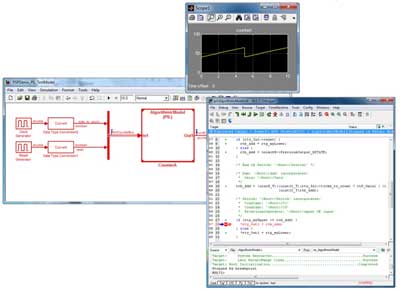

Simulink supports executable object code verification with profiling analysis using software-in-the-loop (SIL) and processor-in-the-loop (PIL) testing. With SIL testing, the generated code is compiled and run on the host computer for a quick assessment of the code's execution, using test data provided by Simulink, which serves as the test harness. With PIL testing, the generated code is cross-compiled into executable object code (EOC) and run on the actual flight processor or on an instruction set simulator, again with Simulink in the loop as the test harness.

Embedded Coder supports PIL testing for bare-board or RTOS execution on any embedded processor using customizable APIs and reference implementations. One example implementation, available for viewing and download, uses the Green Hills MULTI IDE and INTEGRITY RTOS with a Freescale MPC8620 processor.

Figure 4: Verifying Executable Object Code using PIL Testing

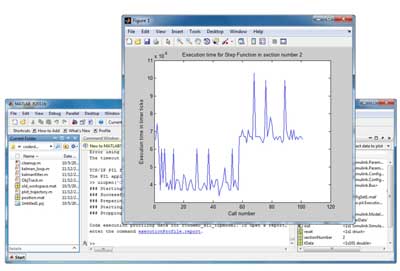

A code profile execution report is generated during PIL testing for identifying bottlenecks and optimizing designs, for example by using code replacement technologies that substitute single instruction, multiple data (SIMD) and Intel Integrated Performance Primitives (IPP) optimizations for the default ANSI/ISO C generated code. MATLAB can generate plots from the code profile execution data for further analysis. DO-178 and related standards require that flight software be verified on the actual flight hardware, which makes PIL testing a critical verification activity for high-integrity systems.

Figure 5: Profiling Execution Cycles using MATLAB
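Once cycle counts are exported from a profiling run, a simple summary often points to the bottleneck. The Python sketch below assumes the counts are available as one list per generated function; summarize_profile and the sample data are hypothetical. Note that the highest observed count is only a measurement from the tests that were run, not a bound on worst-case execution time.

```python
from statistics import mean


def summarize_profile(samples):
    """Summarize per-function execution cycle counts over many time steps.

    samples: dict mapping a function name to a list of cycle counts, one per
    execution. Returns (summary, bottleneck), where bottleneck is the name
    with the largest observed maximum.
    """
    summary = {
        name: {"max": max(cycles), "mean": mean(cycles), "runs": len(cycles)}
        for name, cycles in samples.items()
    }
    bottleneck = max(summary, key=lambda name: summary[name]["max"])
    return summary, bottleneck


# Hypothetical cycle counts for two generated step functions.
profile = {
    "controller_step": [1200, 1250, 1900],
    "filter_step": [800, 820, 790],
}
summary, worst = summarize_profile(profile)
print(worst, summary[worst]["max"], summary[worst]["mean"])  # controller_step 1900 1450
```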

Code Verification

With Model-Based Design, the same requirements-based simulation test cases used for verifying the model can be reused for SIL and PIL testing. Engineers can apply the input data used in the model simulations, and then use Simulation Data Inspector in Simulink to compare SIL and PIL test results with the model simulation results and determine whether they are numerically equivalent.

Figure 6: Comparing Simulation and PIL Test Results using Simulation Data Inspector
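Exact equality is usually too strict when comparing model and target results, for instance when the target code uses single-precision arithmetic, so a comparison typically applies tolerances. The Python sketch below flags samples that fall outside an absolute or relative tolerance using math.isclose. The function find_mismatches, the tolerance values, and the signal data are assumptions for illustration and do not reproduce the comparison algorithm in Simulation Data Inspector.

```python
import math


def find_mismatches(baseline, candidate, abs_tol=1e-6, rel_tol=1e-6):
    """Return indices where candidate differs from baseline beyond tolerance.

    A sample passes if |b - c| <= max(rel_tol * max(|b|, |c|), abs_tol),
    which is the rule math.isclose applies.
    """
    if len(baseline) != len(candidate):
        raise ValueError("signals must have the same number of samples")
    return [
        i for i, (b, c) in enumerate(zip(baseline, candidate))
        if not math.isclose(b, c, rel_tol=rel_tol, abs_tol=abs_tol)
    ]


# Hypothetical logged outputs from a model simulation and a PIL run.
model_out = [0.0, 0.5, 1.0, 1.5]
pil_out = [0.0, 0.5000000001, 1.0, 1.52]
print(find_mismatches(model_out, pil_out))  # [3]
```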

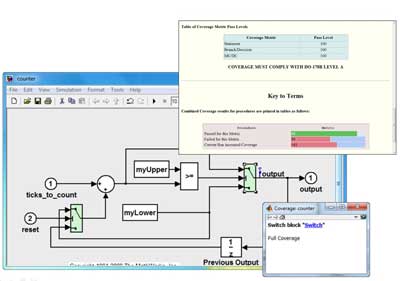

DO-178B also requires structural coverage analysis of the software, including Modified Condition/Decision Coverage (MC/DC) for Level A software, to assess whether the code is fully exercised during testing. Simulink Verification and Validation provides an analogous concept at the model level, model coverage, to assess whether the model was fully exercised. Together, model and code coverage analysis help detect potential errors in design, implementation, and testing. In R2011b, Embedded Coder integrates with LDRA Testbed for code coverage and additional DO-178 workflow support.

Figure 7: Measuring Model and Code Coverage using Simulink Model Coverage Tool and LDRA Testbed
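MC/DC requires showing that each condition in a decision independently affects the decision's outcome. The Python sketch below checks the unique-cause form of MC/DC for the hypothetical decision a and (b or c): it searches a test set for pairs of vectors that differ in exactly one condition and flip the outcome. The functions decision, mcdc_pairs, and mcdc_satisfied are illustrative assumptions, not part of Simulink Verification and Validation or LDRA Testbed.

```python
from itertools import combinations


def decision(a, b, c):
    """Hypothetical decision with three conditions."""
    return a and (b or c)


def mcdc_pairs(tests, n_conditions, fn):
    """Find unique-cause MC/DC independence pairs in a set of test vectors.

    For each condition index, collect pairs of vectors that differ only in
    that condition and produce different decision outcomes.
    """
    pairs = {i: [] for i in range(n_conditions)}
    for t1, t2 in combinations(tests, 2):
        differing = [i for i in range(n_conditions) if t1[i] != t2[i]]
        if len(differing) == 1 and fn(*t1) != fn(*t2):
            pairs[differing[0]].append((t1, t2))
    return pairs


def mcdc_satisfied(tests, n_conditions, fn):
    """True if every condition has at least one independence pair."""
    pairs = mcdc_pairs(tests, n_conditions, fn)
    return all(pairs[i] for i in range(n_conditions))


T, F = True, False
tests = [(T, T, F), (F, T, F), (T, F, F), (T, F, T)]
print(mcdc_satisfied(tests, 3, decision))      # True
print(mcdc_satisfied(tests[:3], 3, decision))  # False: no pair for condition c
```

Four vectors suffice here for three conditions; dropping the last one leaves condition c without an independence pair, which a coverage tool would report as a gap.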

In summary, Model-Based Design enables automatic generation of flight code that is both efficient and readily verifiable at the model, source code, and executable object code levels. By focusing development and verification on Simulink models and simulation test cases, and by reusing those models and tests to help satisfy DO-178B and DO-178C software objectives, companies can significantly reduce costs and shorten time-to-market. Integrations and published APIs enable complete solutions that include third-party tools used in DO-178 development projects. Complementing these software process improvements, Simulink offers additional advantages through its support for systems engineering and related standards (for example, ARP 4754) and for hardware development and related standards (for example, DO-254), when used with MathWorks products for physical modeling of systems, hardware-in-the-loop (HIL) testing, and HDL code generation for FPGAs.

***

MATLAB and Simulink are registered trademarks of The MathWorks, Inc. See www.mathworks.com/trademarks for a list of additional trademarks. Other product or brand names may be trademarks or registered trademarks of their respective holders.

About the author:

Tom Erkkinen is the Embedded Application Manager at MathWorks. He leads a corporate initiative to foster industry adoption of embedded code generation technologies. Before joining MathWorks, Tom worked at Lockheed Martin developing a variety of control algorithms and real-time software, including space shuttle robotics for NASA JSC. He has spent more than fifteen years helping hundreds of companies deploy Model-Based Design with embedded code generation. Tom holds a B.S. degree in Aerospace Engineering from Boston University and an M.S. degree in Mechanical Engineering from Santa Clara University.